Detailed Explanation of Faceswap Deep Learning AI for Video Face Swapping

In this post, Chunjiang Muke introduces deepfake, the hugely popular "black technology" for swapping faces in images and videos. Some time ago it made headlines when the face of Gal Gadot, the Wonder Woman actress, was swapped into adult films. Starting from the GitHub project faceswap, we will work through a video face-swapping tutorial step by step.

Note:

This technology carries real risks of misuse. This tutorial is for technical exchange only. Please do not use it for improper purposes.

Technical Analysis

The faceswap project is a deep learning algorithm that learns to reconstruct facial features. You feed it a bunch of images, and after several hours of training it can generate synthesized approximations of them. It does not simply paste similar images together; it learns what a face looks like across different expressions, so the trained model can produce a convincing replacement on a given image. For a detailed analysis, you can refer to Reddit.

Imagine you could stare at someone continuously for 12 hours, observing their expressions and memorizing them. Then, when they ask you to draw them smiling or sad, you could do it instantly and in high-definition quality. This is the power of deep learning.

Face swapping trains primarily on facial features, so the input images should contain only faces. If an image contains more than a face, the face must be extracted before replacement. Skipping this step is what caused the error encountered later in this tutorial.
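One way to picture how a single model can learn two faces and then swap them is the shared-encoder design commonly described for deepfake-style models: one encoder compresses any face into a small code, and each person gets their own decoder. The sketch below is a toy illustration in plain Python, not faceswap's actual code. The class name SwapModel, the layer sizes, and the random linear layers are all assumptions made for illustration, and no training is performed.

```python
import random


def make_matrix(rows, cols, rng):
    """Build a small random weight matrix (stand-in for a trained layer)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix by a vector using plain Python lists."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class SwapModel:
    """Hypothetical toy model: one shared encoder, one decoder per person."""

    def __init__(self, pixels=16, code_size=4, seed=0):
        rng = random.Random(seed)
        self.encoder = make_matrix(code_size, pixels, rng)    # shared by A and B
        self.decoder_a = make_matrix(pixels, code_size, rng)  # rebuilds person A
        self.decoder_b = make_matrix(pixels, code_size, rng)  # rebuilds person B

    def reconstruct(self, face, identity):
        """Encode a face, then decode it with the chosen person's decoder."""
        code = matvec(self.encoder, face)
        decoder = self.decoder_a if identity == "A" else self.decoder_b
        return matvec(decoder, code)

    def swap_a_to_b(self, face_a):
        """The swap: encode a face of A, but decode it as person B."""
        return self.reconstruct(face_a, "B")
```

In this picture, training would teach each decoder to rebuild its own person from the shared code, which is why the swap only amounts to picking the other decoder at conversion time.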

Detailed Steps for AI Face Swapping

Copy the Project Locally and Start Model Training

First, you need to install git. Use git clone to copy the faceswap project to your local machine. Download the test data from the files uploaded on this site. The faceswap project address is https://github.com/deepfakes/faceswap

git clone https://github.com/deepfakes/faceswap

cd faceswap

pip install -r requirements.txt

wget https://www.bobobk.com/wp-content/uploads/2019/01/input.zip

unzip input.zip

After unzipping, you will see input_A and input_B training data folders in the directory.

Training is time-consuming, and takes even longer on a weak GPU, so it is best to run it inside screen so that it keeps going if your terminal session disconnects. This tutorial uses a GTX 1080 Ti for training. The commands are as follows:

screen -S faceswap

python faceswap.py train

The model will be saved in the model folder. Here, I stopped training once the loss dropped below 0.03.
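The stopping rule above was applied by hand, by watching the printed loss. As a rough sketch of the same idea in code, the helper below reports when the most recent losses have all fallen under the threshold. The function name should_stop and the window parameter are assumptions added for illustration (requiring several consecutive values avoids stopping on a single lucky batch); faceswap itself does not provide this function.

```python
def should_stop(recent_losses, threshold=0.03, window=5):
    """Return True when the last `window` loss values are all below `threshold`.

    `recent_losses` is a list of loss values in the order they were observed.
    """
    if len(recent_losses) < window:
        return False  # not enough history to decide yet
    return all(loss < threshold for loss in recent_losses[-window:])
```

For example, `should_stop([0.05, 0.029, 0.028, 0.027], window=3)` is true because the last three values are all under 0.03.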

Input File Preparation

Once the model is trained, it is time for the part we have been waiting for: the face swap itself. Since our test data is Trump and Nicolas Cage, we first find a short, recent video clip. Video source: https://www.youtube.com/watch?v=S73swRzxs8Y. Because the model only replaces faces in images, we first need to convert the video into images. The tools.py file in the project can do this. Usage:

mkdir input

mkdir output

python tools.py effmpeg -i cage.mp4 -o input -s 00:0148 -e 00:0156

This takes the part of the video from 1 minute 48 seconds to 1 minute 56 seconds.
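The start and end values in that command are clock times. Written in the conventional HH:MM:SS form, 1 minute 48 seconds is 00:01:48 and 1 minute 56 seconds is 00:01:56. The small helper below is only an illustration of that conversion; the name to_timestamp is hypothetical and it is not part of faceswap.

```python
def to_timestamp(minutes, seconds, hours=0):
    """Format a clip position as a zero-padded HH:MM:SS string."""
    total = hours * 3600 + minutes * 60 + seconds
    h, remainder = divmod(total, 3600)
    m, s = divmod(remainder, 60)
    return f"{h:02d}:{m:02d}:{s:02d}"


start = to_timestamp(1, 48)  # clip start used in this tutorial
end = to_timestamp(1, 56)    # clip end used in this tutorial
```

Because the helper normalizes through total seconds, values such as 75 minutes roll over into the hours field correctly.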

The effmpeg command failed. Why? Because FFmpeg was not installed. FFmpeg is the tool that extracts images from a video and assembles images back into a video. For installation, see https://cloud.tencent.com/developer/article/1027379. Here are the CentOS 7 installation commands directly:

yum install -y vim

yum install -y epel-release

rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

yum repolist

rpm --import http://li.nux.ro/download/nux/RPM-GPG-KEY-nux.ro

rpm -Uvh http://li.nux.ro/download/nux/dextop/el7/x86_64/nux-dextop-release-0-1.el7.nux.noarch.rpm

yum repolist

yum update -y

yum install -y ffmpeg

ffmpeg -version
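The same check can be made from Python before launching a long job, which avoids discovering a missing dependency halfway through. This is a minimal sketch using the standard library's shutil.which; the function name tool_available is an assumption for illustration.

```python
import shutil


def tool_available(name):
    """Return True if an executable called `name` is found on the PATH."""
    return shutil.which(name) is not None


if not tool_available("ffmpeg"):
    print("ffmpeg was not found on PATH; install it before running effmpeg")
```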

Once FFmpeg is installed, the command works. The extracted frames are in the input folder. Next comes the conversion.

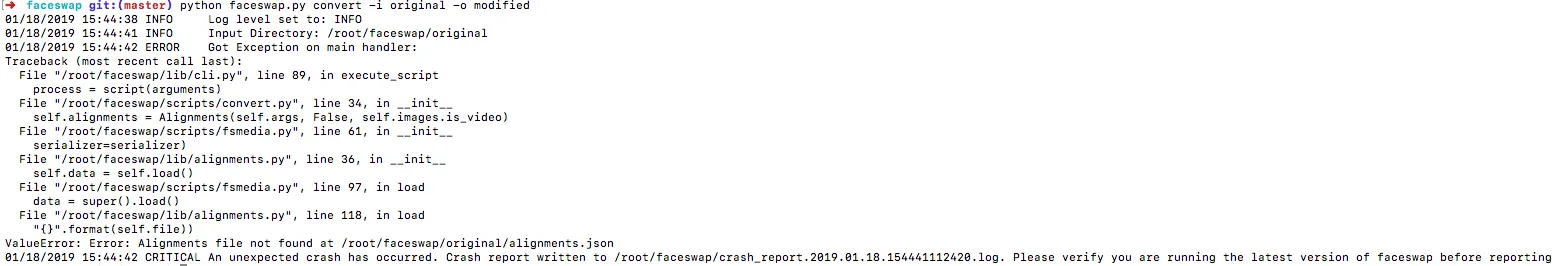

Running the command reported an error:

python faceswap.py convert -i original -o modified

The reason is that the alignment files are missing. On reflection, this makes sense: the model was trained on images containing a single face, but here we are giving it whole frames from a movie. Conversion first has to locate the face to be replaced, so face alignment must be performed beforehand. This is what the project's extract command does (at first I thought extract meant decompressing files, hahaha).

To extract the faces from the frames previously taken from the video, run:

python faceswap.py extract -i original -o input
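Before moving on to conversion, it can help to confirm that the previous step actually wrote image files, since an empty folder tends to produce confusing errors later. The sketch below counts image files in a folder using only the standard library. The function name count_images and the set of suffixes are assumptions for illustration, not something faceswap defines.

```python
from pathlib import Path

# Suffixes treated as images here; adjust to whatever your frames use.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def count_images(folder):
    """Count the image files directly inside `folder` (no recursion)."""
    return sum(
        1
        for path in Path(folder).iterdir()
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES
    )
```

Calling `count_images("input")` from the project directory should then return a number greater than zero if the frames or faces were written there.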

Running the Trained Model for Face Swapping

Continue running the convert command:

python faceswap.py convert -i input -o output

You can watch the face-swapped images being written out one by one. It is quite exciting!

Finally, reassemble the face-swapped images into a video:

python tools.py effmpeg -i output -r input/cage.mp4 -o modified/cage.mp4 -a gen-vid

The generated .mp4 is in the modified folder. Download and play it. It’s really enjoyable to watch.
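If you repeat this step for several clips, it may be convenient to build the reassembly command in a script. The sketch below only mirrors the effmpeg command shown above, with the same flags (-i, -r, -o, -a gen-vid). The function names gen_vid_command and run are hypothetical, and the script is assumed to be started from inside the faceswap project directory.

```python
import subprocess


def gen_vid_command(frames_dir, reference_video, output_video):
    """Build the argument list for the effmpeg gen-vid step used above."""
    return [
        "python", "tools.py", "effmpeg",
        "-i", frames_dir,         # folder of face-swapped images
        "-r", reference_video,    # original video used as the reference
        "-o", output_video,       # where the new video is written
        "-a", "gen-vid",
    ]


def run(command):
    """Run a command and raise an error if it exits with a failure code."""
    subprocess.run(command, check=True)


# Example (not executed here):
# run(gen_vid_command("output", "input/cage.mp4", "modified/cage.mp4"))
```

Passing the arguments as a list rather than a single string avoids shell quoting problems when folder names contain spaces.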

Applications

Since its development, this technology has generated various reactions, with some fearing its power. Imagine a scenario where someone uses your likeness to publish a video that you never recorded.

Personally, I think this is a lot of fun. You can swap yourself into your favorite characters, like Harry Potter. Just thinking about it is interesting. As in this article, where the author swapped his wife's face onto a show host; how excited must his wife have been, hahahaha.

Summary

This article gave a step-by-step tutorial on using the faceswap deep learning project to swap faces in video: training the model, processing the video file, swapping the faces in the extracted images, and reassembling them into a new video based on the original. In theory, it can be applied to any video and any face you want to replace, as long as enough training images of the faces are provided. This is also why many celebrities' faces have been swapped into adult films.

I believe this face-swapping technology will produce a large number of fun videos and applications in the future. Stay tuned!

Note: Because this relies on deep learning, model training requires a large amount of computational resources. It is best to train on a GPU or TPU.

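- Original author: 春江暮客 (Chunjiang Muke)

- Original link: https://www.bobobk.com/en/258.html

- Copyright notice: This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. For non-commercial reposting, please credit the source (author and original link); for commercial reposting, please contact the author for authorization.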